Meta unveiled an AI model that can perceive and understand the physical world

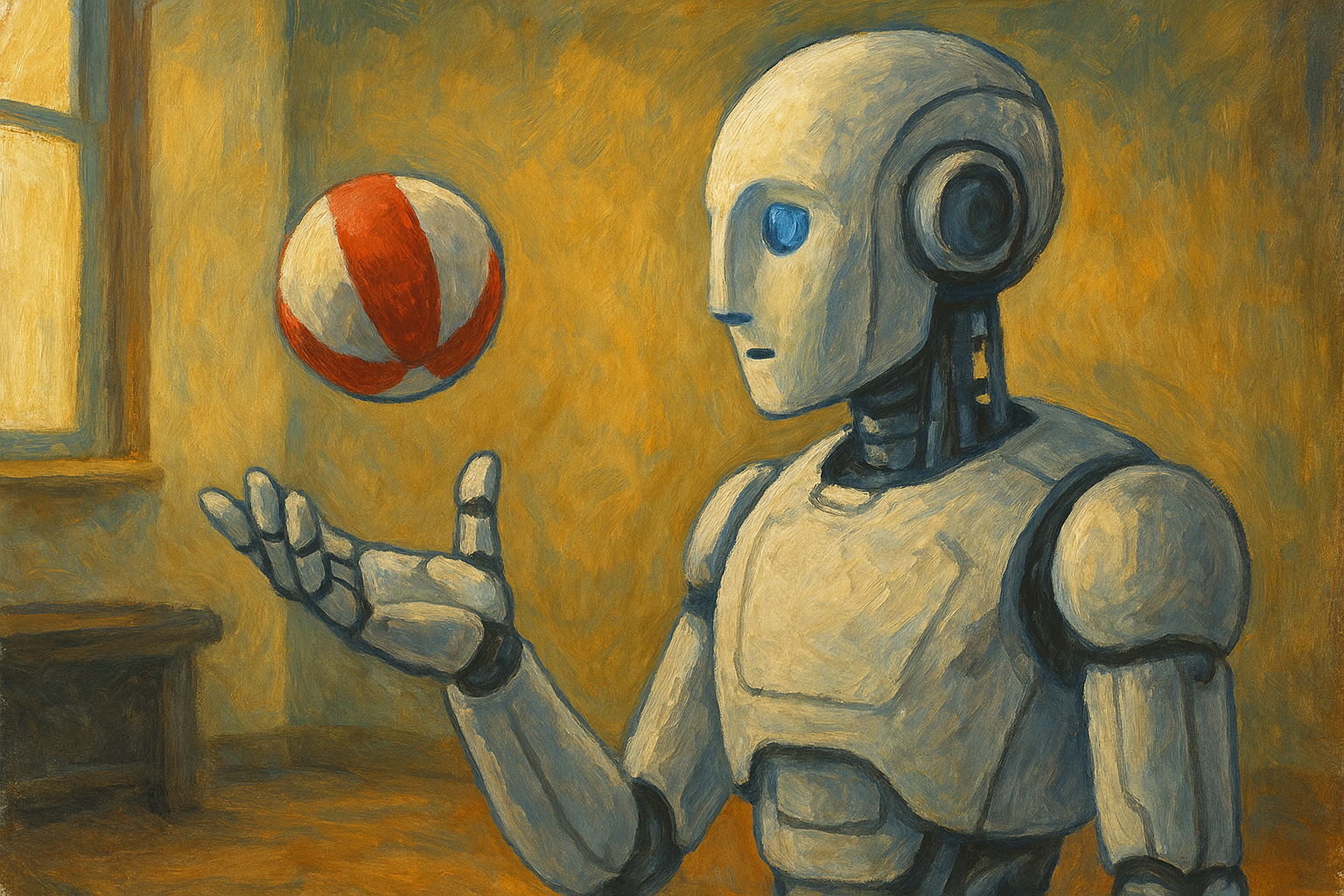

Meta has unveiled the second version of its V-JEPA model, which aims to give AI common sense and an understanding of the physical world. Meta classifies the new model as a “world model”: it lets agents predict events from visual context without relying on specialized sensors or being trained for specific tasks.

V-JEPA 2 expands on last year’s version and is trained on more than one million hours of video content. This allows the AI to form expectations about physical laws – for example, anticipating how a ball moves or how objects in a kitchen interact. This predictive ability should make robots more effective in real-world environments.

In its demonstration, Meta describes how the AI interprets a scene: a person carries a spatula and a plate to a stove with eggs on it. The system infers that the next action is to put the eggs on the plate. This illustrates the model’s ability to build logical chains similar to the intuitive reasoning of humans or animals.

Meta also claims that V-JEPA 2 is 30 times faster than Nvidia’s Cosmos model. However, companies may use different evaluation standards, so a direct comparison may not be valid.

Yann LeCun, Meta’s chief AI scientist, said that world models will be a game changer in robotics. According to him, they will make it possible to do without huge datasets by training agents to act based on a common view of reality. Meta is betting that such AI systems will be able to perform mundane tasks without manual fine-tuning.